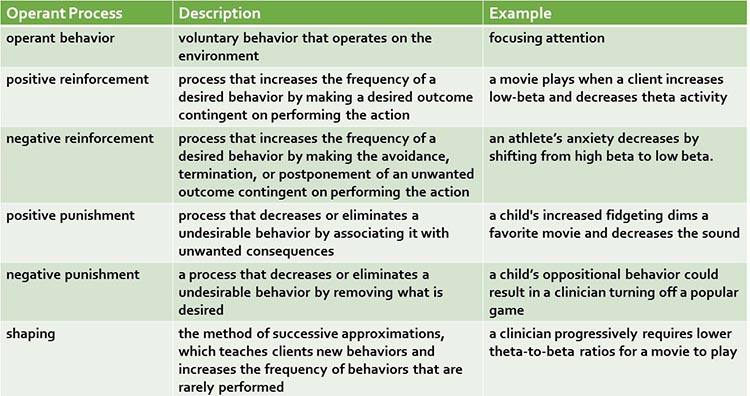

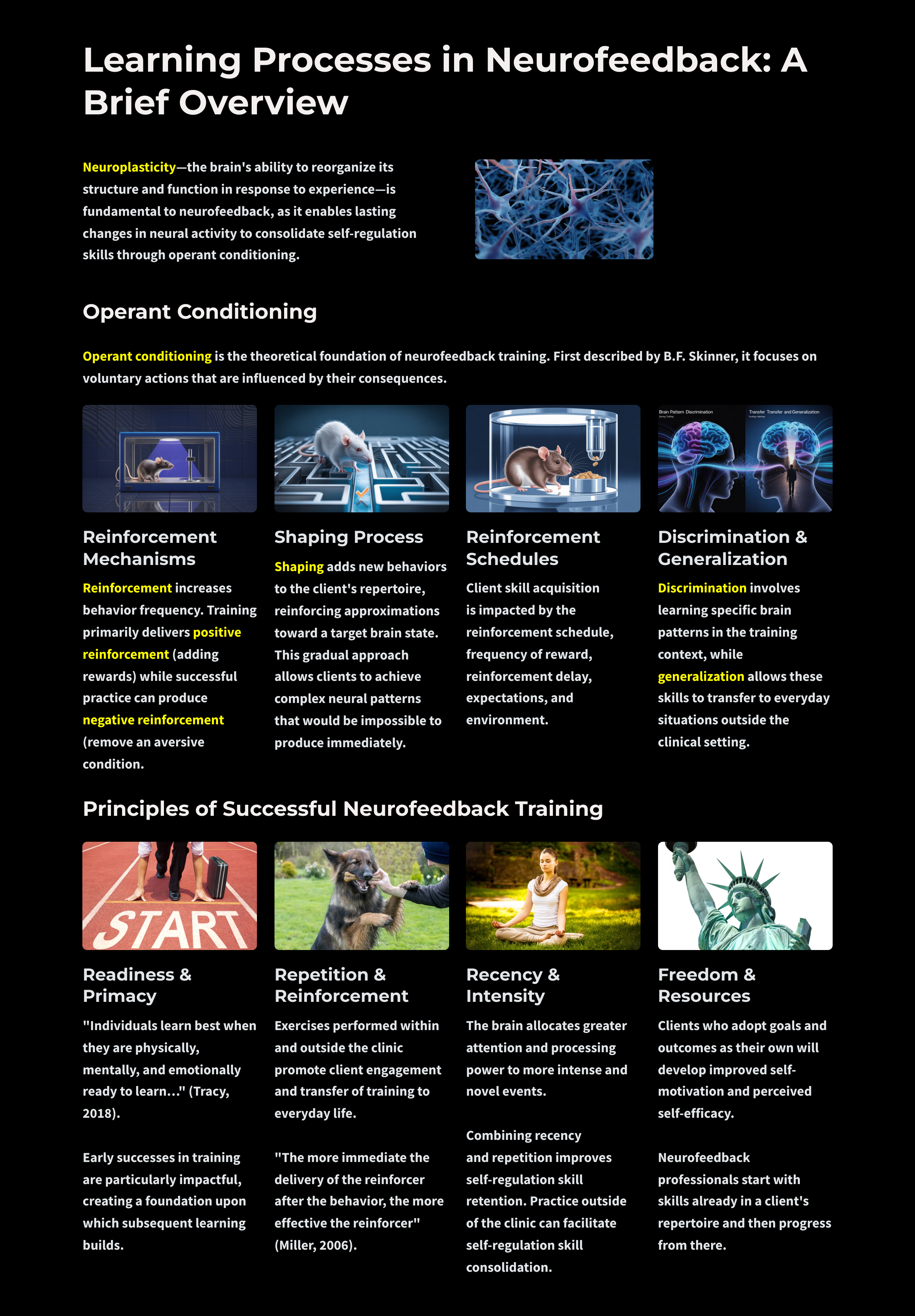

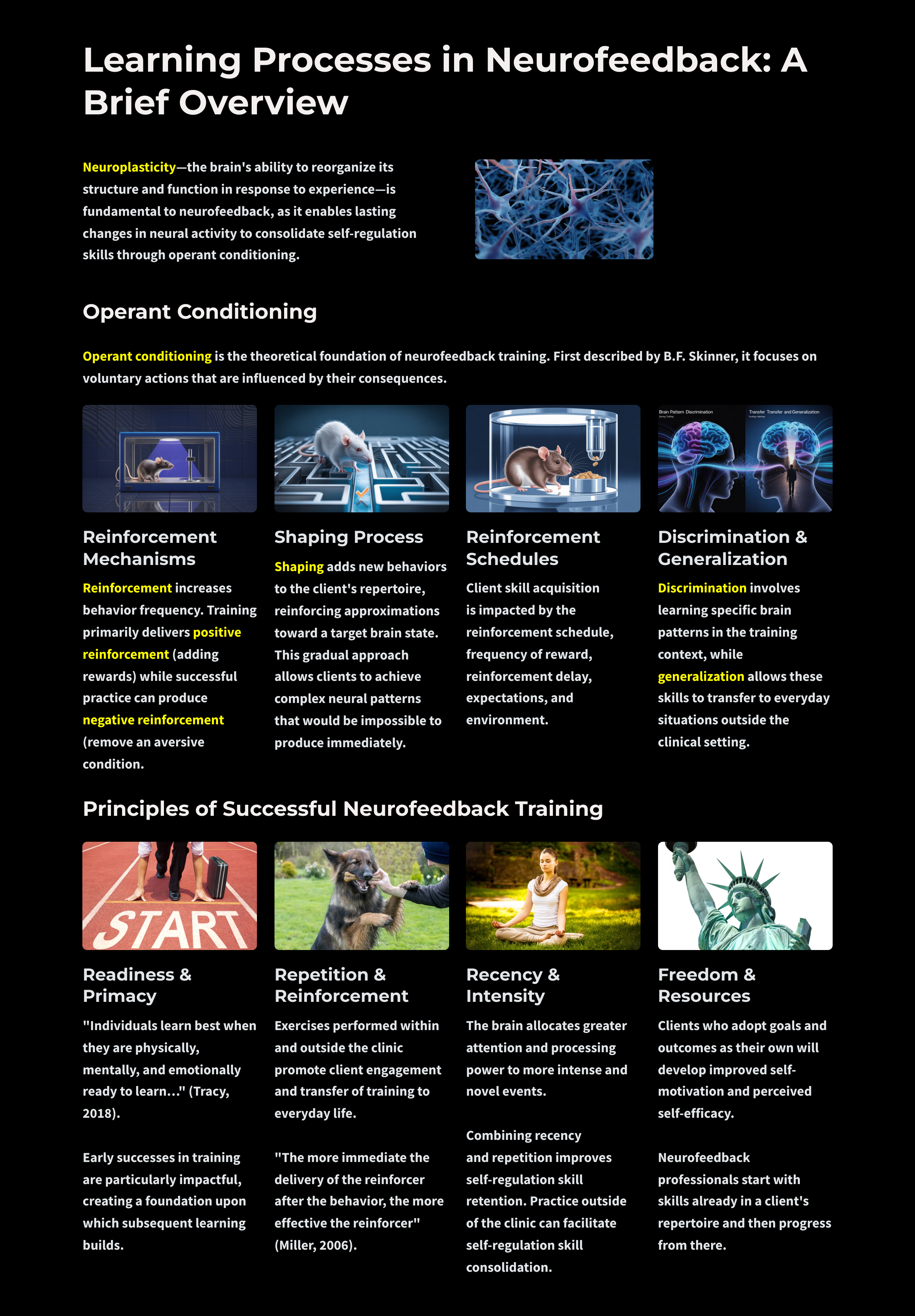

Neurofeedback teaches self-regulation of neuronal activity and related "state changes." Neurofeedback training involves several learning processes, including operant conditioning and observational learning. Neurofeedback software incorporates powerful operant principles by selectively presenting visual, auditory, and tactile reinforcing stimuli.

A movie plays when a child increases low beta and decreases theta activity (positive reinforcement). A score counter stops reversing when a child focuses on a reading selection after several minutes of distraction (negative reinforcement). The training goal becomes progressively more demanding as the child succeeds (shaping). Graphic © Roman Ziets/Shutterstock.com.

Sherlin et al. (2011) emphasized the importance of learning theory in neurofeedback: "It is our contention that future applications in clinical work, research, and development should not stray from the already-demonstrated basic principles of learning theory until empirical evidence demonstrates otherwise." (p. 292)

BCIA Blueprint Coverage

This unit covers I. Orientation to Neurofeedback - C. Overview of Principles of Human Learning as They Apply to Neurofeedback.

Its topics are Three Main Types of Learning, Classical Conditioning, Operant Conditioning, Nonassociative Learning, Observational Learning, and Critical Elements in Neurofeedback Training.


We can divide learning into three main categories: associative, nonassociative, and observational. Associative learning and observational learning are most relevant to neurofeedback. In associative learning, we create connections between stimuli, between behaviors, or between stimuli and behaviors. Associative learning aids our survival since it allows us to predict future events based on our experience. Learning that B follows A gives us time to prepare for B. Two forms of associative learning are classical and operant conditioning (Cacioppo & Freberg, 2016).

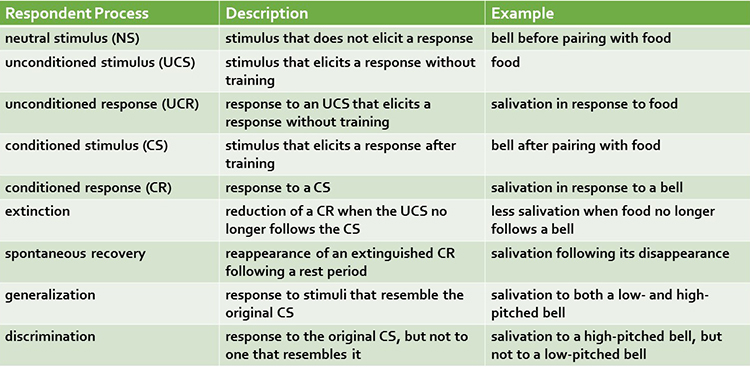

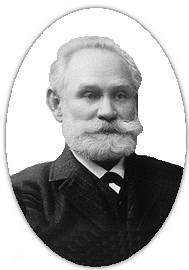

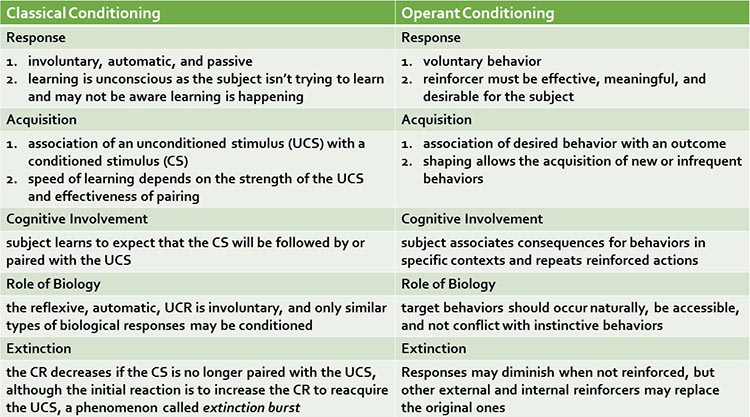

Classical Conditioning

Pavlov (1927) described classical conditioning based on his famous research with dogs. Due to faulty English translations, we now use the adjectives conditioned and unconditioned instead of conditional and unconditional. We will use the current terminology to minimize confusion.

Classical conditioning (also known as respondent conditioning) is an unconscious associative learning process that builds connections between paired stimuli that follow each other in time.

Through this learning process, a dog in Pavlov's laboratory learned that if A (a ringing bell) occurs, B (food) will follow. Predicting the future from experience is crucial to our survival since it gives us time to prepare.

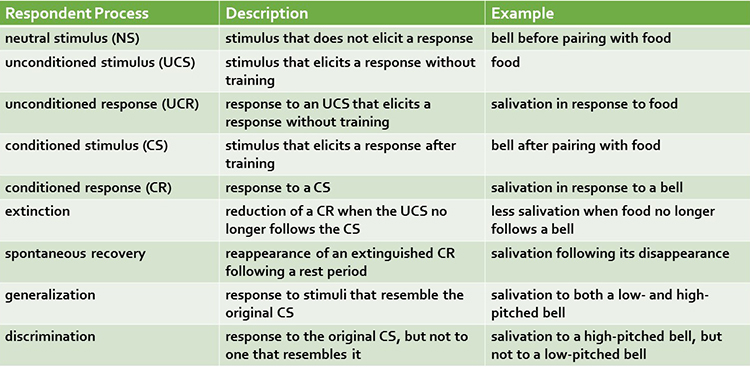

Before learning to anticipate food in Pavlov's laboratory, dogs salivated (unconditioned response) when they saw food (unconditioned stimulus). An unconditioned response (UCR) is elicited by an unconditioned stimulus (UCS) without any prior conditioning. However, until the dogs learned to associate the sound of a bell with food delivery, the bell was a neutral stimulus (NS) that did not elicit salivation.

Through the repeated pairing of the bell with the arrival of food, Pavlov's dogs learned that the bell reliably signaled the arrival of food. The bell became a conditioned stimulus (CS) that elicited the conditioned response (CR) of salivation.

Graphic © VectorMine/iStockphoto.com.

When the CS is no longer consistently followed by the UCS (B no longer follows A), the CR may weaken or disappear. This phenomenon is called extinction. In Pavlov's situation, repeated trials in which food did not follow the bell reduced or eliminated salivation. The extinction of CRs allows us to adapt to changes in our experience. You learn to use passwords less predictable than "password" after becoming a victim of identity theft. You can learn to play with dogs again after being bitten as a child.

Pavlov argued that extinction is not forgetting but evidence of new learning that overrides previous learning. The phenomenon of spontaneous recovery, in which an extinguished CR (salivation) reappears when the CS (bell) is presented again after some time without exposure to it, supports his position. Dogs that stopped salivating to the bell by the end of an extinction session, in which no food followed the bell, salivated to it again after a break or at the start of the next session.

Generalization and discrimination are mirror images of each other. In generalization, the conditioned response is elicited by stimuli (low-pitched bell) that resemble the original conditioned stimulus (high-pitched bell). Generalization promotes our survival because it allows us to apply what we have learned about one stimulus (lions) to similar predators (tigers) without experiencing them.

In contrast, in discrimination, the conditioned response (salivation) is elicited by one stimulus (high-pitched bell) but not another (low-pitched bell). When soldiers return to civilian life, they must distinguish between a CS that signals danger (gunfire) and one that is benign (fireworks). Discrimination is often impaired in soldiers diagnosed with post-traumatic stress disorder (PTSD).

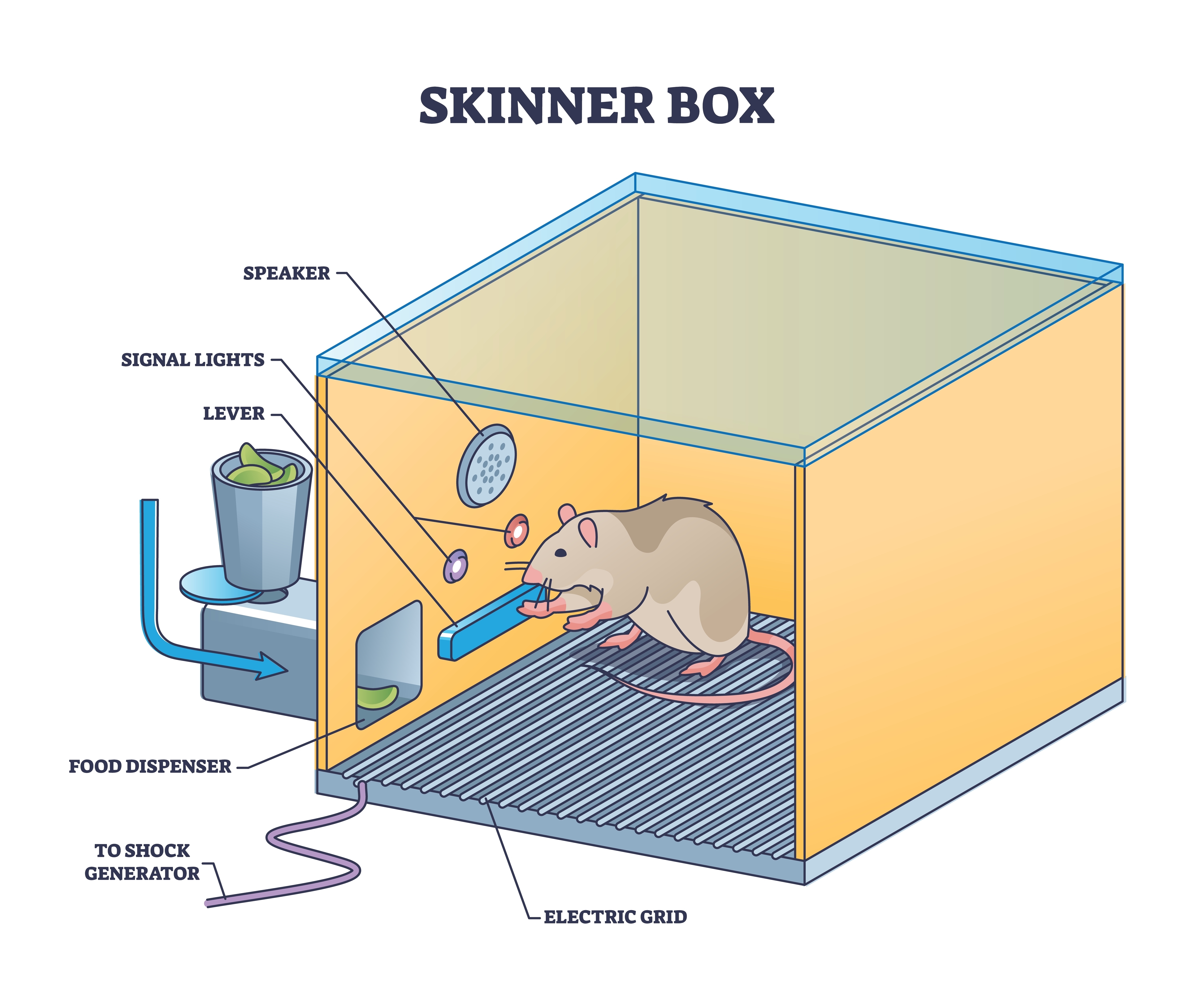

Operant Conditioning

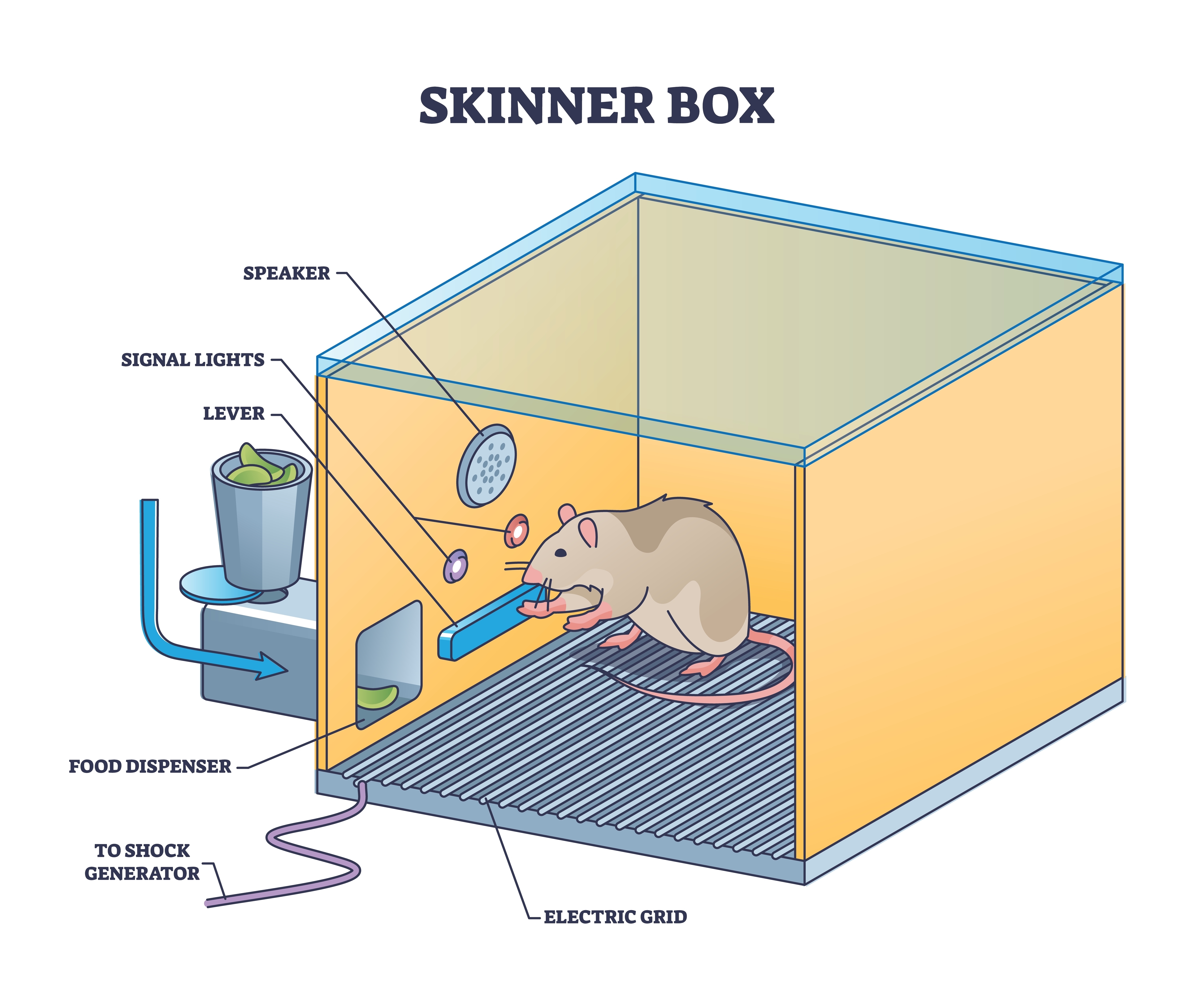

Skinner box graphic © VectorMine/shutterstock.com.

Edward Thorndike's (1913) law of effect proposed that the consequences of a behavior determine whether it is added to your behavioral repertoire. In his account, cats learned to escape his "puzzle boxes" by repeating successful actions and eliminating unsuccessful ones.

Operant conditioning is an unconscious associative learning process that modifies an operant behavior (voluntary behavior that operates on the environment to produce an outcome) by manipulating its consequences (Miltenberger, 2016).

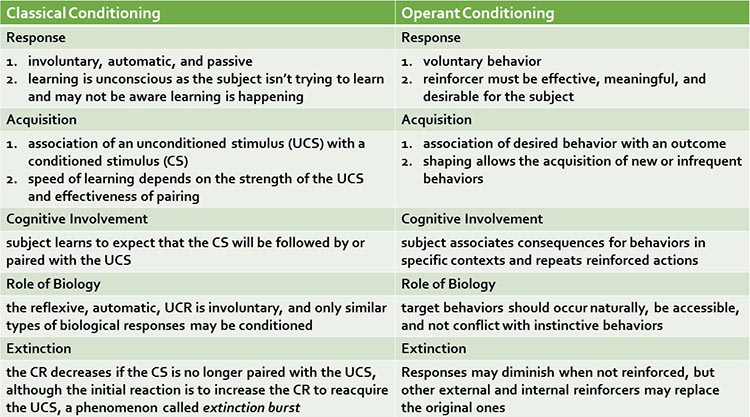

Operant conditioning differs from classical conditioning in several respects. Whereas operant conditioning teaches the association of a voluntary behavior with its consequences, classical conditioning teaches the predictive relationship between two stimuli to modify involuntary behavior.

Neurofeedback teaches self-regulation of neural activity and related "state changes" using operant conditioning via the selective presentation of reinforcing stimuli, including visual, auditory, and tactile displays.

Operant conditioning occurs within a situational context. The identifying characteristics of a situation are called its discriminative stimuli and can include the physical environment and physical, cognitive, and emotional cues. Discriminative stimuli teach us when to perform operant behaviors.

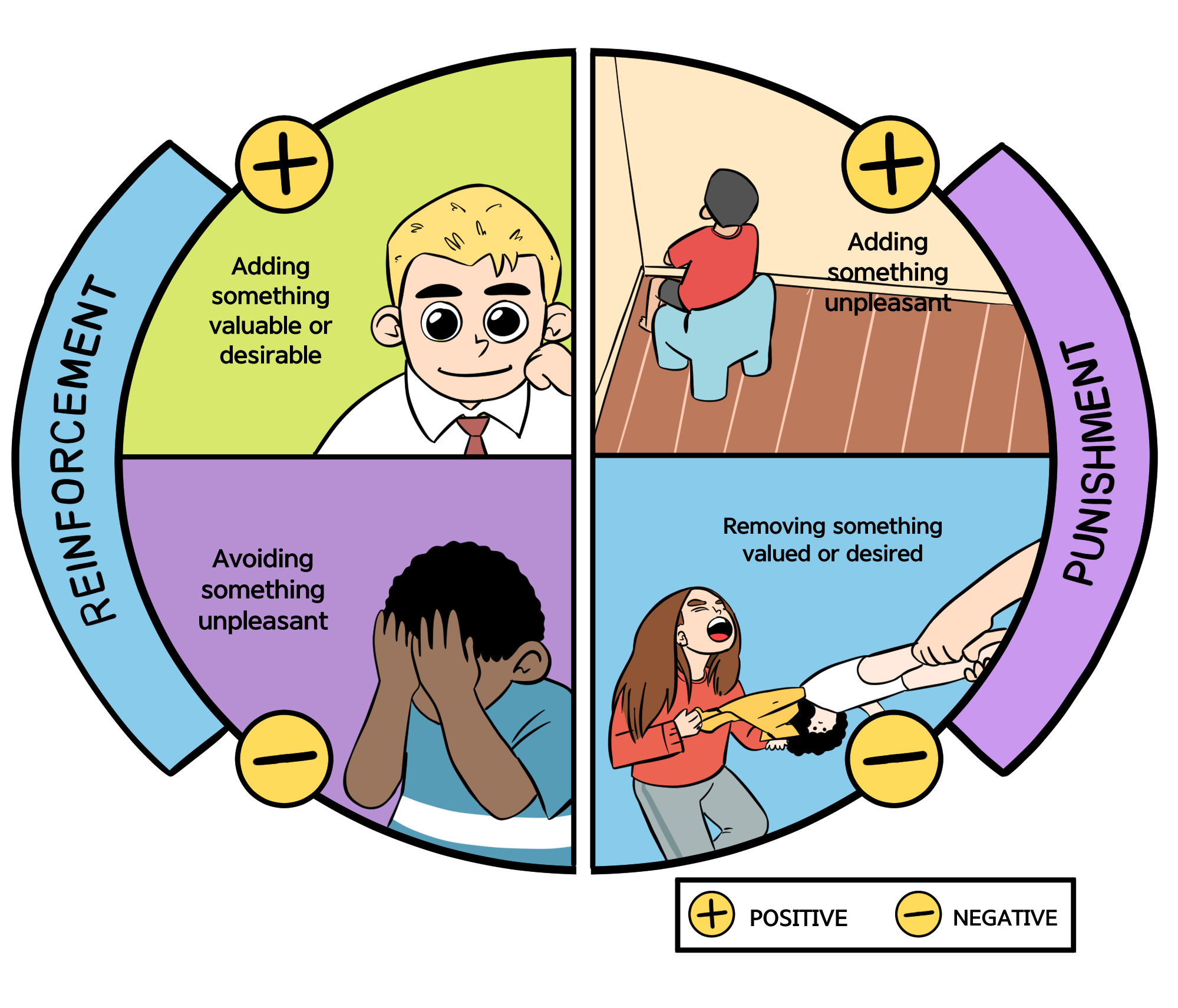

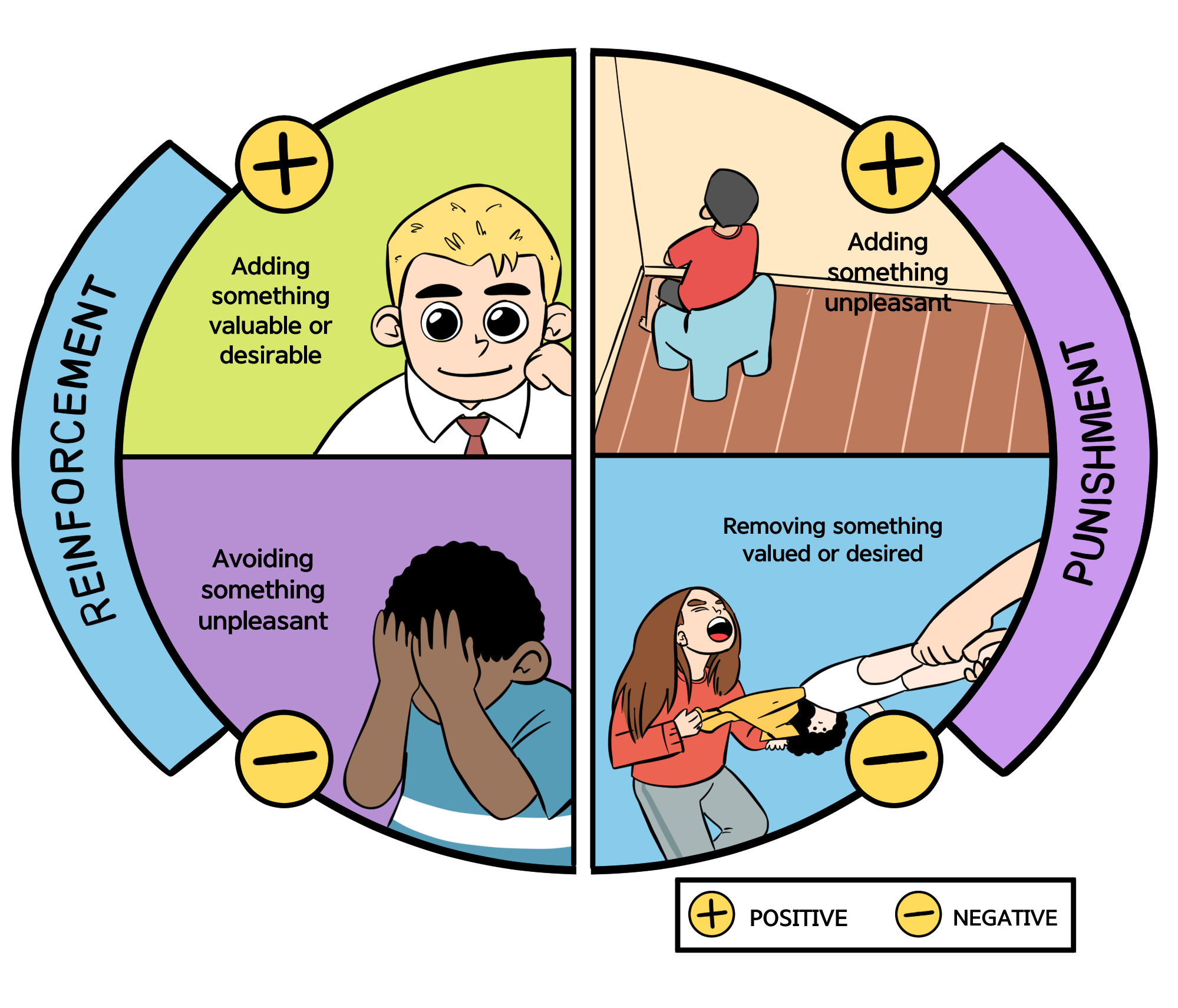

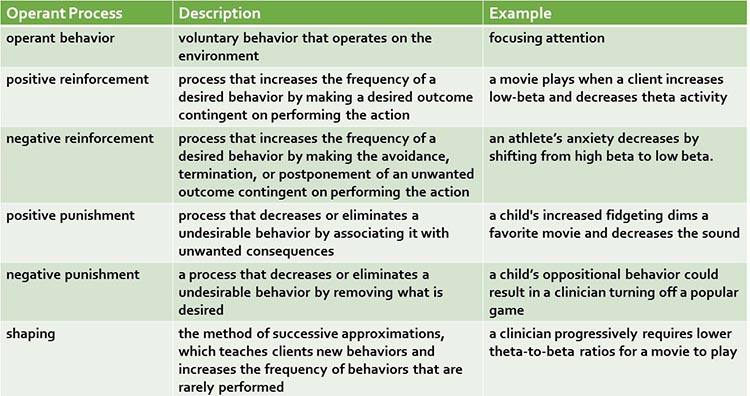

The consequences of operant behaviors can increase or decrease their frequency. Skinner proposed four types of consequences: positive reinforcement, negative reinforcement, positive punishment, and negative punishment. Where positive and negative reinforcement increase behavior, positive and negative punishment decrease it.

Due to individual differences, we cannot know in advance whether a consequence will be reinforcing or punishing, since these are not intrinsic properties of a consequence. We can only determine whether a consequence is reinforcing or punishing by measuring how it affects the preceding behavior. In neurofeedback, the movie that motivates a client's best performance is the one most likely to reinforce that client, regardless of the therapist's personal preference.

Positive reinforcement increases the frequency of a desired behavior by making a desired outcome contingent on performing the action. For example, a movie plays when a client diagnosed with attention deficit hyperactivity disorder (ADHD) increases low-beta and decreases theta activity.

Negative reinforcement increases the frequency of a desired behavior by making the avoidance, termination, or postponement of an unwanted outcome contingent on performing the action. For example, an athlete's anxiety decreases when the athlete shifts from high-beta to low-beta activity, and this relief reinforces the shift.

Positive punishment decreases or eliminates an undesirable behavior by making an unwanted consequence contingent on it. For example, a child's increased fidgeting dims a favorite movie and lowers the sound.

Negative punishment decreases or eliminates an undesirable behavior by removing what is desired. For example, oppositional behavior could result in a clinician turning off a popular game.
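Because reinforcement and punishment are defined by their measured effect on behavior, the four consequence types reduce to two questions: was a stimulus added or removed, and did the behavior become more or less frequent? The Python sketch below encodes that logic. The names `Change` and `classify_consequence` are hypothetical, and comparing two raw response rates is a simplification; in practice, a clinician would look for a consistent change across several sessions.

```python
from enum import Enum


class Change(Enum):
    """Whether the consequence adds a stimulus or removes one."""
    ADDED = "added"
    REMOVED = "removed"


def classify_consequence(change: Change, baseline_rate: float,
                         rate_with_contingency: float) -> str:
    """Name a consequence from its measured effect on the preceding behavior.

    baseline_rate: how often the behavior occurred before the contingency.
    rate_with_contingency: how often it occurs once the consequence is applied.
    """
    if rate_with_contingency > baseline_rate:
        effect = "reinforcement"   # the behavior became more frequent
    elif rate_with_contingency < baseline_rate:
        effect = "punishment"      # the behavior became less frequent
    else:
        return "no measurable effect"
    sign = "positive" if change is Change.ADDED else "negative"
    return f"{sign} {effect}"


# Hypothetical examples (rates are responses per session):
print(classify_consequence(Change.ADDED, 4, 9))    # positive reinforcement
print(classify_consequence(Change.REMOVED, 6, 1))  # negative punishment
```

The same consequence (a movie, a tone) can land in different cells for different clients, which is why the function takes measured rates rather than the stimulus itself.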

Reinforcement Criteria

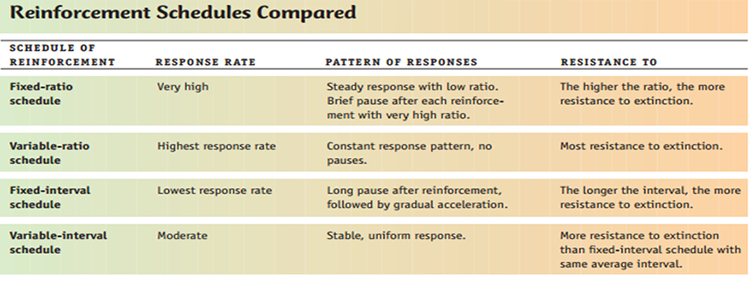

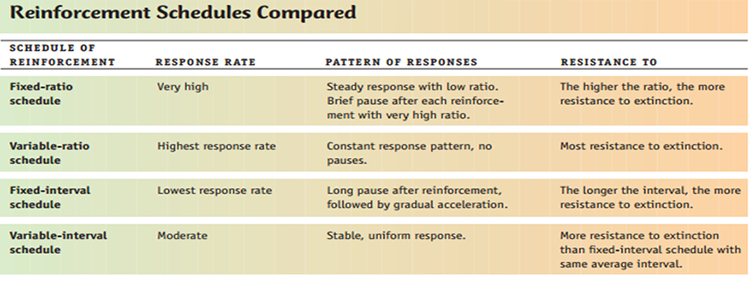

Current research is exploring the optimal reinforcement criteria for neurofeedback training. Client skill acquisition is markedly affected by parameters such as reinforcement schedule, frequency of reward, reinforcement delay, conflicting reinforcements, conflicting expectations, and changes to the training environment.

While continuous reinforcement (reinforcement of every instance of the desired behavior) is helpful during the early stage of skill acquisition, it is impractical as clients attempt to transfer the skill to real-world settings. Since reinforcement outside of the clinic is intermittent, partial reinforcement schedules, in which the desired behavior is reinforced only some of the time, become important as training progresses. Behavior learned under partial reinforcement is more resistant to extinction, in which failure to reinforce the desired behavior reduces its frequency.
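One way to move from continuous to partial reinforcement is to thin the schedule gradually across sessions. The sketch below assumes a simple probabilistic rule: in the first session every criterion response is reinforced (p = 1.0), and the probability then declines linearly to a floor by the final session. The linear ramp, the 0.5 floor, and the function names are illustrative assumptions, not a published protocol.

```python
import random


def reinforcement_probability(session: int, n_sessions: int, floor: float = 0.5) -> float:
    """Probability of reinforcing a criterion response in `session` (1-based).

    Session 1 uses continuous reinforcement (p = 1.0); p then falls linearly
    so that the final session reinforces with probability `floor`.
    """
    if not 1 <= session <= n_sessions:
        raise ValueError("session must be between 1 and n_sessions")
    if n_sessions == 1:
        return 1.0
    progress = (session - 1) / (n_sessions - 1)  # 0.0 at the start, 1.0 at the end
    return 1.0 - progress * (1.0 - floor)


def should_reinforce(session: int, n_sessions: int, rng: random.Random,
                     floor: float = 0.5) -> bool:
    """Decide whether this particular criterion response earns a reward."""
    return rng.random() < reinforcement_probability(session, n_sessions, floor)


rng = random.Random(42)  # fixed seed so the example is repeatable
for session in (1, 10, 20):
    p = reinforcement_probability(session, 20)
    rewarded = sum(should_reinforce(session, 20, rng) for _ in range(100))
    print(f"session {session:2d}: p = {p:.2f}, {rewarded}/100 responses reinforced")
```

A floor above zero keeps some reinforcement in place, which matches the goal of preparing the client for the intermittent reinforcement available outside the clinic.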

In neurofeedback, variable reinforcement schedules, in which reinforcement occurs after a variable number of responses (variable ratio) or for the first response after a variable duration of time (variable interval), produce higher response rates than their fixed counterparts.
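The difference between ratio and interval schedules is easiest to see in code. The sketch below is one simple way to implement each, assuming the required response count (variable ratio) or waiting time (variable interval) is drawn uniformly around a chosen mean. The class names, the uniform distributions, and the example means are illustrative assumptions; commercial neurofeedback systems may implement schedules differently.

```python
import random


class VariableRatio:
    """Reinforce after a variable number of criterion responses averaging `mean_ratio`."""

    def __init__(self, mean_ratio: int, rng: random.Random):
        if mean_ratio < 1:
            raise ValueError("mean_ratio must be at least 1")
        self.mean_ratio = mean_ratio
        self.rng = rng
        self._count = 0
        self._required = self._draw()

    def _draw(self) -> int:
        # Uniform on 1..(2 * mean - 1), whose average is `mean_ratio`.
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self) -> bool:
        """Register one criterion response; return True if it is reinforced."""
        self._count += 1
        if self._count >= self._required:
            self._count = 0
            self._required = self._draw()
            return True
        return False


class VariableInterval:
    """Reinforce the first criterion response after a variable wait averaging `mean_s` seconds."""

    def __init__(self, mean_s: float, rng: random.Random):
        if mean_s <= 0:
            raise ValueError("mean_s must be positive")
        self.mean_s = mean_s
        self.rng = rng
        self._available_at = self._next_time(0.0)

    def _next_time(self, now: float) -> float:
        # Uniform on [0, 2 * mean], whose average is `mean_s`.
        return now + self.rng.uniform(0.0, 2.0 * self.mean_s)

    def respond(self, t: float) -> bool:
        """Register a criterion response at time t (seconds); return True if reinforced."""
        if t >= self._available_at:
            self._available_at = self._next_time(t)
            return True
        return False


rng = random.Random(7)
vr = VariableRatio(mean_ratio=5, rng=rng)
vi = VariableInterval(mean_s=10.0, rng=rng)
# Hypothetical client producing one criterion response every 0.5 s for 5 minutes.
times = [0.5 * k for k in range(1, 601)]
vr_rewards = sum(vr.respond() for _ in times)
vi_rewards = sum(vi.respond(t) for t in times)
print(f"VR-5: {vr_rewards} rewards; VI-10 s: {vi_rewards} rewards")
```

Under the ratio schedule, responding faster earns proportionally more rewards; under the interval schedule, the clock caps the number of rewards, which is one reason the two schedules shape response rates differently.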

Sherlin et al. (2011) emphasized the importance of professionals understanding targeted brain rhythms and the underlying EEG neurophysiology. For example, the sensorimotor rhythm (SMR) consists of spindles lasting at least 0.25 seconds. Criteria such as "time above threshold" or "duration of sustained reward" tailor feedback to this spindle structure and produce superior outcomes compared to reinforcing any momentary rise above an SMR amplitude threshold.
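The contrast between a sustained-duration criterion and a simple threshold crossing can be sketched as follows. The example assumes the system already provides an SMR amplitude envelope sampled `fs` times per second; the function names, sample values, and threshold are hypothetical. A reward fires once per episode, when amplitude has stayed above threshold for at least 0.25 seconds.

```python
def sustained_rewards(amplitude, fs, threshold, min_duration_s=0.25):
    """Indices at which a reward fires because amplitude has stayed above
    threshold for at least min_duration_s seconds (one reward per episode)."""
    min_samples = max(1, round(min_duration_s * fs))
    rewards, run = [], 0
    for i, value in enumerate(amplitude):
        if value > threshold:
            run += 1
            if run == min_samples:  # the episode just became long enough
                rewards.append(i)
        else:
            run = 0  # a dip below threshold ends the episode
    return rewards


def crossing_rewards(amplitude, threshold):
    """Naive rule for comparison: reward every sample above threshold."""
    return [i for i, value in enumerate(amplitude) if value > threshold]


# Hypothetical SMR envelope (µV) sampled 8 times per second: two brief blips,
# then one burst lasting 3 samples (0.375 s).
smr = [4.0, 6.5, 4.2, 6.1, 4.0, 6.8, 7.0, 6.6, 4.1]
print(crossing_rewards(smr, threshold=6.0))           # [1, 3, 5, 6, 7]
print(sustained_rewards(smr, fs=8, threshold=6.0))    # [6]
```

The naive rule rewards the two brief blips as well as the burst; the duration criterion rewards only the burst that is long enough to resemble an SMR spindle.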

Sherlin et al. (2011) advised that: "For operant conditioning, it is very important to be aware of specifically 'what behavior' is being conditioned in order to achieve learning and to improve the specificity" (p. 300).

This is especially important in the context of EEG artifacts, which are false signals that masquerade as brain electrical activity. The EEG is vulnerable to diverse biological (electrocardiogram, eye movement, respiratory, skeletal muscle) and environmental (50/60-Hz line noise, radiofrequency) artifacts. Neurofeedback systems should provide real-time artifact control, and clinicians should carefully monitor training to prevent "artifact-driven feedback." We don't want to inadvertently reward changes in eye-blink frequency or in the degree of frontalis muscle contraction. Artifact control avoids reinforcing a coincidental response and increases training specificity and success (Sherlin et al., 2011).
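Real-time artifact control can be thought of as a gate in front of the reward. The sketch below assumes each short epoch has already been summarized by three hypothetical metrics (peak EEG amplitude, peak eye-channel amplitude for blinks, and high-frequency power as a muscle index) and withholds reward whenever any of them exceeds its limit, even if the trained EEG feature met its criterion. The metric names and numeric limits are placeholders chosen for illustration; appropriate limits depend on the system, montage, and client.

```python
from dataclasses import dataclass


@dataclass
class Epoch:
    peak_eeg_uv: float      # largest absolute EEG deflection in the epoch (µV)
    peak_eog_uv: float      # largest absolute eye-channel deflection, e.g., a blink (µV)
    emg_power: float        # high-frequency power used as a muscle (EMG) index
    meets_criterion: bool   # did the trained EEG feature meet the reward criterion?


# Placeholder rejection limits for illustration only.
LIMITS = {"eeg": 100.0, "eog": 75.0, "emg": 15.0}


def artifact_reasons(epoch: Epoch, limits: dict = LIMITS) -> list:
    """List which artifact checks this epoch fails (an empty list means clean)."""
    reasons = []
    if epoch.peak_eeg_uv > limits["eeg"]:
        reasons.append("EEG amplitude")
    if epoch.peak_eog_uv > limits["eog"]:
        reasons.append("eye movement/blink")
    if epoch.emg_power > limits["emg"]:
        reasons.append("muscle")
    return reasons


def deliver_reward(epoch: Epoch, limits: dict = LIMITS) -> bool:
    """Reward only clean epochs that also meet the training criterion."""
    return epoch.meets_criterion and not artifact_reasons(epoch, limits)


clean = Epoch(peak_eeg_uv=40.0, peak_eog_uv=20.0, emg_power=5.0, meets_criterion=True)
blink = Epoch(peak_eeg_uv=60.0, peak_eog_uv=150.0, emg_power=5.0, meets_criterion=True)
print(deliver_reward(clean), deliver_reward(blink))  # True False
print(artifact_reasons(blink))                       # ['eye movement/blink']
```

Returning the list of reasons, rather than a single yes/no, also gives the clinician something to monitor during the session.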

Shaping

Shaping, the method of successive approximations, teaches clients new behaviors and increases the frequency of rarely performed behaviors. A clinician starts by reinforcing spontaneous voluntary behaviors that resemble the desired behavior and then progressively raises the criteria for reinforcement to achieve the training goal. For example, the clinician can gradually require lower theta-to-beta ratios for a movie to play.
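The theta-to-beta example can be expressed as a series of successively stricter criteria. The sketch below assumes the clinician has a baseline ratio and a training goal and simply divides the distance into equal steps; the values 4.0 and 2.5, the number of steps, and the function names are hypothetical. Lower ratios are harder, so the movie plays only when the client's ratio is at or below the current step's criterion.

```python
def shaping_criteria(baseline_ratio: float, goal_ratio: float, n_steps: int) -> list:
    """Successively stricter theta/beta criteria from baseline toward the goal."""
    if n_steps < 1:
        raise ValueError("n_steps must be at least 1")
    step = (baseline_ratio - goal_ratio) / n_steps
    return [baseline_ratio - step * k for k in range(1, n_steps + 1)]


def movie_plays(current_ratio: float, criterion: float) -> bool:
    """Reinforce only when the theta/beta ratio is at or below the criterion."""
    return current_ratio <= criterion


criteria = shaping_criteria(baseline_ratio=4.0, goal_ratio=2.5, n_steps=3)
print(criteria)                       # [3.5, 3.0, 2.5]
print(movie_plays(3.2, criteria[0]))  # True: meets the first, easier step
print(movie_plays(3.2, criteria[1]))  # False: not yet good enough for step 2
```

In practice, the clinician would move to the next criterion only after the client succeeds reliably at the current one; that advancement rule is left out of this sketch.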

Sherlin et al. (2011) warn that shaping should not be performed using auto-thresholding because this approach rewards transient responses in the right direction, whether or not this shift involves an absolute change from baseline. Auto-thresholding can deliver reinforcement when clients are not producing the desired behavior and even when they are producing the opposite behavior. Finally, they observe that shaping is not applicable to slow cortical potential (SCP) neurofeedback since there are no normative values to shape towards; only the direction of deviation from baseline (positive or negative) is critical to training success.
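The auto-thresholding problem Sherlin et al. (2011) describe can be reproduced with a toy example. The sketch assumes a simplified auto-threshold that rewards any sample exceeding the mean of the preceding few samples (real systems use a variety of rules), and a hypothetical SMR amplitude that drifts steadily downward, the opposite of the training goal, while alternating slightly from sample to sample.

```python
from statistics import mean


def auto_threshold_rewards(amplitude, window=4):
    """Simplified auto-threshold: reward a sample that exceeds the mean of the
    previous `window` samples, regardless of where it stands relative to baseline."""
    return [i for i in range(window, len(amplitude))
            if amplitude[i] > mean(amplitude[i - window:i])]


def fixed_threshold_rewards(amplitude, threshold, start=0):
    """Reward only samples above a threshold fixed at the client's baseline."""
    return [i for i in range(start, len(amplitude)) if amplitude[i] > threshold]


# Hypothetical SMR amplitude: trends DOWN by 0.1 per sample, with +/-0.5 alternation.
declining = [10.0 - 0.1 * i + (0.5 if i % 2 == 0 else -0.5) for i in range(40)]
baseline = mean(declining[:4])

auto = auto_threshold_rewards(declining, window=4)
fixed = fixed_threshold_rewards(declining, baseline, start=4)
print(len(auto), len(fixed))                           # 18 2
print(all(declining[i] < declining[0] for i in auto))  # True
```

The auto-threshold keeps rewarding every other sample while SMR amplitude falls well below where it started; the fixed baseline threshold stops rewarding once amplitude drops below baseline.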

Discrimination and Generalization

Discrimination and generalization are the ultimate goals of neurofeedback training.

Discrimination teaches when the desired behavior will be reinforced. The initial discriminative stimuli include the cues provided by the training environment, like animations and tones. A clinician may introduce a stressor following successful skill acquisition to "raise the bar." Now, the stressor serves as another discriminative stimulus for performing the desired behavior. Discrimination teaches clients to identify and disarm symptom triggers instead of unconsciously reacting to them in everyday life.

Generalization teaches the transfer of the desired behavior to multiple environments and in response to diverse stressors. This enables a client to respond adaptively to novel and unanticipated triggering stimuli. While the ability to perform the learned response in many situations contributes to flexibility, it is not always advantageous. Based on an understanding of set and setting, discrimination helps determine when a response is required and which response is appropriate.

Check out the TED-Ed video, The Difference Between Classical and Operant Conditioning.

Nonassociative Learning

Nonassociative learning is a simple learning process in which the strength of the response to a stimulus changes with repeated exposure. Habituation and sensitization are nonassociative learning processes.

In habituation, our response to an unchanging, harmless stimulus weakens with repeated exposure. This process can interfere with the transfer of training to everyday environments: clients can quickly tune out reminders (such as colored dots) to practice self-regulation skills. One strategy to counter habituation is to randomly change the color and shape of these prompts.
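Randomly varying reminder prompts is straightforward to automate. The sketch below assumes a hypothetical set of colors and shapes and picks a new combination for each change, never repeating the previous one back to back; the option lists and the weekly cadence in the example are illustrative assumptions.

```python
import random

# Hypothetical prompt options; any visually distinct set would do.
COLORS = ["red", "blue", "green", "yellow", "purple"]
SHAPES = ["dot", "star", "square", "triangle"]


def next_prompt(previous, rng):
    """Pick a random (color, shape) reminder that differs from the previous one."""
    while True:
        candidate = (rng.choice(COLORS), rng.choice(SHAPES))
        if candidate != previous:
            return candidate


rng = random.Random(2024)
prompt = None
for week in range(1, 5):
    prompt = next_prompt(prompt, rng)
    print(f"week {week}: {prompt[0]} {prompt[1]}")
```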

In sensitization, our response to a range of stimuli strengthens following exposure to a single powerful stimulus. For example, following a traumatic experience (earthquake), your response to movement or noise might be exaggerated.

Graphic © maroke/Shutterstock.com.

Observational Learning

Observational learning allows us to rapidly modify an existing skill or acquire a new one by observing others. This social learning process is highly efficient because it bypasses trial-and-error learning. We avoid experiencing negative outcomes personally because others have already experienced them for us.

Observational learning is interactive. For example, we listen to a teacher play a violin passage, attempt to play the same notes, and then compare the two performances. We play the passage again and again until we "get it right." Feedback allows us to refine our playing until we match the original sample. Graphic © Art_Photo/shutterstock.com.

Critical Elements in Neurofeedback Training

Successful neurofeedback training incorporates readiness, repetition (exercise), reinforcement (effect), primacy, recency, intensity, freedom, and resources (requirement).

Readiness

Readiness means preparation for training and involves concentration and enthusiasm. "Individuals learn best when they are physically, mentally, and emotionally ready to learn, and do not learn well if they see no reason for learning" (Tracy, 2018). Graphic © Tom Wang/Shutterstock.com.

This requires adequate rest and nutrition and a positive and supportive environment. Client education that explains the training process, clearly defines goals and outcomes, and links training to personally relevant benefits enhances readiness.

In contrast, poor client motivation, lack of understanding of potential benefits, and biopsychosocial impediments can impair readiness.

Significant biopsychosocial constraints include a chaotic, disruptive, or traumatic environment, inadequate rest and nutrition, developmental delays, lack of resources, and traumatic brain injury or other medical problems.

Repetition (Exercise)

Exercises performed within and outside the clinic promote client engagement and transfer of training to everyday life. Successful training recognizes brain plasticity and the physiological requirements for skill acquisition and consolidation. Practice periods should range from 10 to 30 minutes and include a minimum of 20 repetitions. Graphic © PH888/Shutterstock.com.

Reinforcement (Effect)

Rewards must be personally desirable to the client. Clinicians can confirm this by observing the impact of reinforcers on engagement, motivation, and performance. Intrinsically reinforcing outcomes accelerate the development of mastery and improve retention. Clear evidence of success builds confidence and reduces frustration. Encouragement by staff and family is essential to sustain progress when a client experiences difficulty.

Sherlin et al. (2011) speculated that secondary reinforcement for achievement during neurofeedback (monetary rewards or points that can be redeemed for a prize) might accelerate learning self-regulation skills. They cautioned that secondary reinforcement should be delivered for EEG changes and not simple attendance.

Reinforcement must occur "immediately" to ensure that the brain correctly pairs this consequence with the desired behavior that preceded it. Early studies determined that the optimal delay between an operant behavior and reinforcement is less than 250 to 350 ms (Felsinger & Gladstone, 1947; Grice, 1948). The faster reinforcement follows the desired behavior, the less time is required for skill acquisition. This means that shorter EEG filter response times are better (Sherlin et al., 2011, p. 298). As Miller (2006) put it, "The more immediate the delivery of the reinforcer after the behavior, the more effective the reinforcer" (p. 233). Graphic © Nicky Rhodes/Shutterstock.com.

Egner and Sterman (2006) cautioned that neurofeedback training "should stress exercise rather than entertainment" because complex games might prevent clients from linking brain responses to reinforcement due to distraction produced by more salient game stimuli.
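Filter delay is one concrete, measurable source of the reinforcement delay discussed in this section. For a linear-phase FIR filter with N taps, the group delay is (N - 1)/2 samples, or (N - 1)/(2·fs) seconds at sampling rate fs. The sketch below applies that standard formula to two hypothetical filters at 256 samples per second and compares them with the 250 ms figure reported in the early studies. It covers only the filter; other processing and display latency (modeled here as a hypothetical `other_s` term) adds to the total, and IIR filters have frequency-dependent delay that this formula does not describe.

```python
def fir_group_delay_s(n_taps: int, fs_hz: float) -> float:
    """Group delay of a linear-phase FIR filter in seconds: (N - 1) / (2 * fs)."""
    if n_taps < 1 or fs_hz <= 0:
        raise ValueError("n_taps must be >= 1 and fs_hz must be positive")
    return (n_taps - 1) / (2.0 * fs_hz)


def feedback_latency_s(n_taps: int, fs_hz: float, other_s: float = 0.0) -> float:
    """Filter delay plus any other (hypothetical) processing/display latency."""
    return fir_group_delay_s(n_taps, fs_hz) + other_s


BUDGET_S = 0.250  # lower end of the 250-350 ms range reported in early studies

for taps in (63, 255):
    latency = feedback_latency_s(taps, fs_hz=256.0)
    verdict = "within" if latency <= BUDGET_S else "exceeds"
    print(f"{taps} taps: {latency * 1000:.0f} ms ({verdict} a 250 ms budget)")
# 63 taps: 121 ms (within a 250 ms budget)
# 255 taps: 496 ms (exceeds a 250 ms budget)
```

Longer filters give sharper frequency separation but cost delay, so this trade-off is one place where the reinforcement-timing principle becomes an engineering decision.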

Primacy

Clinicians should approach neurofeedback training like a contractor building a house: start with a strong foundation. Graphic © Mary Rice/Shutterstock.com.

Client education should present the core principles that will set the foundation for future learning. Like home construction, skill acquisition should proceed in a stepwise fashion that builds upon previous experience. The successful acquisition of initial skills builds confidence and aids the mastery of more complex skills.

Recency

Clients more readily recall material that they learned most recently. The combination of recency and repetition improves the retention of self-regulation skills. Session frequency also affects learning: more frequent sessions support skill acquisition because practice and reinforcement have occurred more recently. Graphic © Leah-Anne Thompson/Shutterstock.com.

Intensity

The brain is a difference and intensity detector. The brain allocates greater attention and processing power to more intense and novel events. This suggests that adjunctive experiential exercises can facilitate self-regulation skill retention. Graphic © Solis Images/Shutterstock.com.

Freedom

Active, willing participation facilitates learning, while coercion, compulsion, and forced participation inhibit it. Reward systems are only effective when they encourage active and engaged participation. Clients who adopt goals and outcomes as their own will develop improved self-motivation and perceived self-efficacy. Graphic © UbjsP/Shutterstock.com.

Resources (Requirement)

Neurofeedback professionals start with skills already in a client's repertoire and then progress from there. For example, heart rate variability biofeedback builds on a client's existing breathing skills and then shapes its mechanics and rate. Shaping, the gradual reinforcement of successive approximations of a target behavior, adds new skills to a client's repertoire. Graphic © Robert Kneschke/Shutterstock.com.

Olton and Noonberg (1980) proposed that performance goals should be raised when a client succeeds more than 70% of the time and lowered when a client succeeds less than 30% of the time. Neurofeedback systems incorporate algorithms that automatically revise goals based on the client's performance (e.g., time above threshold) to maintain motivation and ensure sufficient challenge.
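The 70%/30% guideline from Olton and Noonberg (1980) translates directly into a threshold-update rule. The sketch below is a minimal version, assuming reward is earned when a trained feature (for example, SMR amplitude) exceeds the threshold and that difficulty changes by a fixed 5% step; the step size, the function name, and the per-block success counts are hypothetical, and actual neurofeedback software may use different adjustment algorithms.

```python
def adjust_threshold(threshold: float, successes: int, trials: int,
                     step: float = 0.05, raise_above: float = 0.70,
                     lower_below: float = 0.30) -> float:
    """Apply the 70%/30% rule to a reward threshold.

    Assumes reward is earned when the trained feature exceeds `threshold`,
    so raising the threshold makes the task harder.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    success_rate = successes / trials
    if success_rate > raise_above:   # succeeding too easily: raise the bar
        return threshold * (1.0 + step)
    if success_rate < lower_below:   # failing too often: lower the bar
        return threshold * (1.0 - step)
    return threshold                 # between 30% and 70%: leave the goal unchanged


# Hypothetical block results for a threshold of 8.0 µV:
print(adjust_threshold(8.0, successes=16, trials=20))  # 80% success: harder
print(adjust_threshold(8.0, successes=10, trials=20))  # 50% success: unchanged
print(adjust_threshold(8.0, successes=4, trials=20))   # 20% success: easier
```

Keeping the success rate in the middle band is meant to balance motivation (enough rewards) against challenge (rewards still depend on the desired change).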

Glossary

classical conditioning: an unconscious associative learning process that builds connections between paired stimuli that follow each other in time.

conditioned response (CR): in classical conditioning, a response to a conditioned stimulus (CS). For example, salivation in response to a bell.

conditioned stimulus (CS): in classical conditioning, a stimulus that elicits a response after training. For example, a bell after pairing with food.

discrimination (classical conditioning): a response to the original CS, but not to one that resembles it. For example, salivation to a high-pitched bell, but not to a low-pitched bell.

discrimination (operant conditioning): the performance of the desired behavior in one context, but not another. For example, increasing sensorimotor rhythm (SMR) activity at bedtime, but not during a morning commute.

discriminative stimuli: in operant conditioning, the identifying characteristics of a situation (the physical environment and physical, cognitive, and emotional cues) that teach us when to perform operant behaviors. For example, a traffic slowdown could signal a client to practice effortless breathing.

EEG artifacts: false signals that masquerade as brain electrical activity.

extinction (classical conditioning): reducing a CR when the UCS no longer follows the CS. For example, less salivation when food no longer follows a bell.

extinction (operant conditioning): a reduction in response frequency when the desired behavior is no longer reinforced. For example, a client practices less when the clinician ceases to praise this behavior.

generalization (classical conditioning): response to stimuli that resemble the original CS. For example, salivation to both a low- and high-pitched bell.

generalization (operant conditioning): the performance of the desired behavior in multiple contexts. For example, increasing low-beta activity during both classroom lecture and golf practice.

habituation: a nonassociative learning phenomenon in which responses to unchanging and harmless stimuli decrease.

learning: the process by which we acquire new information, patterns of behavior, or skills.

negative punishment: in operant conditioning, a process that decreases or eliminates an undesirable behavior by removing what is desired. For example, a child’s oppositional behavior could result in a clinician turning off a popular game.

negative reinforcement: in operant conditioning, a process that increases the frequency of the desired behavior by making the avoidance, termination, or postponement of an unwanted outcome contingent on acting. For example, an athlete’s anxiety decreases by shifting from high beta to low beta, rewarding this self-regulation.

neutral stimulus (NS): in classical conditioning, a stimulus that does not elicit a response. For example, a bell before pairing with food.

nonassociative learning: a simple learning process in which the strength of the response to a stimulus changes with repeated exposure.

observational learning: learning by observing others. For example, a fitness client learns to stretch by watching a personal trainer demonstrate the technique.

operant conditioning: an unconscious associative learning process that modifies an operant behavior (voluntary behavior that operates on the environment to produce an outcome) by manipulating its consequences.

positive punishment: in operant conditioning, a process that decreases or eliminates an undesirable behavior by associating it with unwanted consequences. For example, a child's increased fidgeting dims a favorite movie and lowers the sound.

positive reinforcement: in operant conditioning, a process that increases the frequency of the desired behavior by making a desired outcome contingent on performing it. For example, a movie plays when a client increases low-beta and decreases theta activity.

sensitization: a nonassociative learning phenomenon in which there is an exaggerated reaction to diverse stimuli following exposure to a single powerful stimulus.

spontaneous recovery: in classical conditioning, the reappearance of an extinguished CR following a rest period. For example, a dog salivates to the bell again after a rest period, even though salivation had disappeared during extinction.

stimulus discrimination: in classical conditioning, when a conditioned response (CR) is elicited by one conditioned stimulus (CS) but not by another. For example, your blood pressure increases during a painful dental procedure but not during an uncomfortable blood draw.

stimulus generalization: in classical conditioning, when stimuli that resemble a conditioned stimulus (CS) elicit the same conditioned response (CR). For example, when your blood pressure increases during a painful dental procedure and an uncomfortable blood draw.

unconditioned response (UCR): in classical conditioning, an unlearned response elicited by a UCS without training. For example, salivation in response to food.

unconditioned stimulus (UCS): in classical conditioning, a stimulus that elicits a response without training. For example, food.


Visit the BioSource Software Website

BioSource Software offers Physiological Psychology, which satisfies BCIA's Physiological Psychology requirement, and Neurofeedback100, which provides extensive multiple-choice testing over the Biofeedback Blueprint.

Assignment

Now that you have completed this unit, which sounds do you prefer when you have succeeded during neurofeedback training? Which visual displays are more motivating for you?

References

Albino, R., & Burnand, G. (1964). Conditioning of the alpha rhythm in man. Journal of Experimental Psychology, 67(6), 539-544. https://doi.org/10.1037/h0042695

Cacioppo, J. T., & Freberg, L. A. (2016). Discovering psychology (2nd ed.). Cengage Learning.

Clemente, C. D., Sterman, M. B., & Wyrwicka, W. (1964). Post-reinforcement EEG synchronization during alimentary behavior. Electroencephalography and Clinical Neurophysiology, 355-365. https://doi.org/10.1016/0013-4694(64)90069-0

Cooper, J., Heron, T., & Heward, W. (2019). Applied behavior analysis (3rd ed.). Pearson Education.

Durup, G., & Fessard, A. I. (1935). Blocking of the alpha rhythm. L'Année Psychologique, 36(1), 1-32.

Felsinger, J. M., & Gladstone, A. I. (1947). Reaction latency (StR) as a function of the number of reinforcements (N). Journal of Experimental Psychology, 37(3), 214-228. https://doi.org/10.1037/h0055587

Grice, G. R. (1948). The acquisition of a visual discrimination habit following response to a single stimulus. Journal of Experimental Psychology, 38(6), 633-642. https://doi.org/10.1037/h0056158

Jasper, H., & Shagass, C. (1941). Conditioning the occipital alpha rhythm in man. Journal of Experimental Psychology, 28(5), 373-387. https://doi.org/10.1037/h0056139

Kamiya, J. (2011). The first communications about operant conditioning of the EEG. Journal of Neurotherapy, 15(1), 65-73. https://doi.org/10.1080/10874208.2011.545764

Knott, J. R. (1941). Electroencephalography and physiological psychology: Evaluation and statement of problem. Psychological Bulletin, 38(10), 944-975. https://doi.org/10.1037/h0059465

Loomis, A. L., Harvey, E. N., & Hobart, G. (1936). Electrical potentials of the human brain. Journal of Experimental Psychology, 19, 249. https://doi.org/10.1037/h0062089

Malott, R. W., & Shane, J. T. (2013). Principles of behavior (7th ed.). Pearson Prentice Hall.

Miller, L. K. (2006). Principles of everyday behavior analysis (4th ed.). Wadsworth.

Miltenberger, R. G. (2016). Behavior modification: Principles and procedures. Cengage Learning.

Olton, D. S., & Noonberg, A. R. (1980). Biofeedback: Clinical applications in behavioral medicine. Prentice-Hall, Inc.

Pavlov, I. P. (1927). Conditioned reflexes. Oxford University Press.

Sherlin, L. H., Arns, M., Lubar, J., Heinrich, H., Kerson, C., Strehl, U., & Sterman, M. B. (2011). Neurofeedback and basic learning theory: Implications for research and practice. Journal of Neurotherapy, 15, 292-304. https://doi.org/10.1080/10874208.2011.623089

Sterman, M. B., LoPresti, R. W., & Fairchild, M. D. (1969). Electroencephalographic and behavioral studies of monomethyl hydrazine toxicity in the cat. Technical Report AMRL-TR-69-3, Wright-Patterson Air Force Base, Ohio, Air Systems Command.

Thorndike, E. L. (1913). Educational psychology: Briefer course. Teachers College.

Tracy, M. (2018). Personal communication regarding principles of learning.

Travis, L. E., & Egan, J. P. (1938). Conditioning of the electrical response of the cortex. Journal of Experimental Psychology, 22(6), 524-531. https://doi.org/10.1037/h0056243

Wyrwicka, W., & Sterman, M. B. (1968). Instrumental conditioning of sensorimotor cortex EEG spindles in the waking cat. Physiology and Behavior, 3(5), 703-707. https://doi.org/10.1016/0031-9384(68)90139-X